Graph Contrastive Learning

An explanation of node-level Graph Contrastive Learning

Graph neural networks have become very popular and often rely on learning representations for downstream tasks. This blog post will discuss graph contrastive learning, an unsupervised learning technique to construct representations from unlabelled data. The idea is based on slightly modifying the graphs so that the model can learn the notion of similarity. We will present the main ideas behind graph contrastive learning and reference the underlying literature. However, for a more thorough introduction, we recommend the reviews from Liu et al.

Introduction

Although the amount of available data has steadily increased, much of it is neither processed nor annotated. While hand labeling might be an option for a relatively small number of samples, it is usually infeasible in real-world scenarios. Self-supervised learning is a way to make use of large amounts of unlabelled data to learn meaningful representations by exploiting the implicit structure. Representations are a compact way to describe the most important aspects of data in many different domains. For example, representations can capture the essence of words

Self-supervised learning is most prominently used in computer vision tasks, such as image denoising

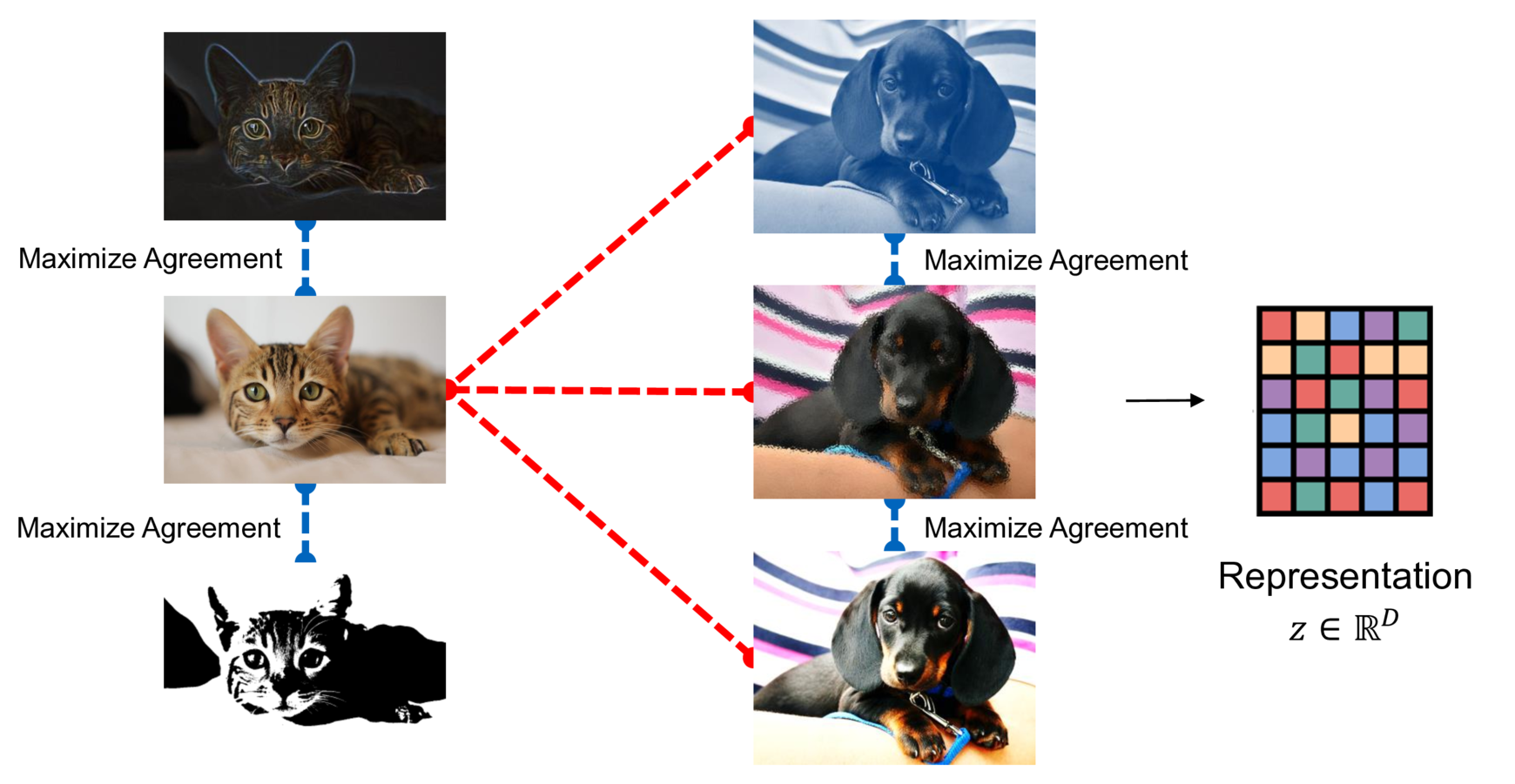

A simple example best explains the idea of contrastive learning. Consider pictures of cats and dogs, as seen in the figure above. We perform (minor) image augmentation techniques on the original pictures, such as changing the contrast or rotating and cropping the image. Although the pictures have been altered, the underlying semantic meaning does not change. All pictures of the cat are still pictures of the same cat, and all dog pictures still show the same dog. The idea behind contrastive learning is that augmentations of the same picture should have a similar representation. On the other hand, the difference in representation to all other images (such as pictures of dogs or other cats) should be as large as possible.

Although contrastive learning has originally been introduced for images, it can also be applied to different data types, such as graphs

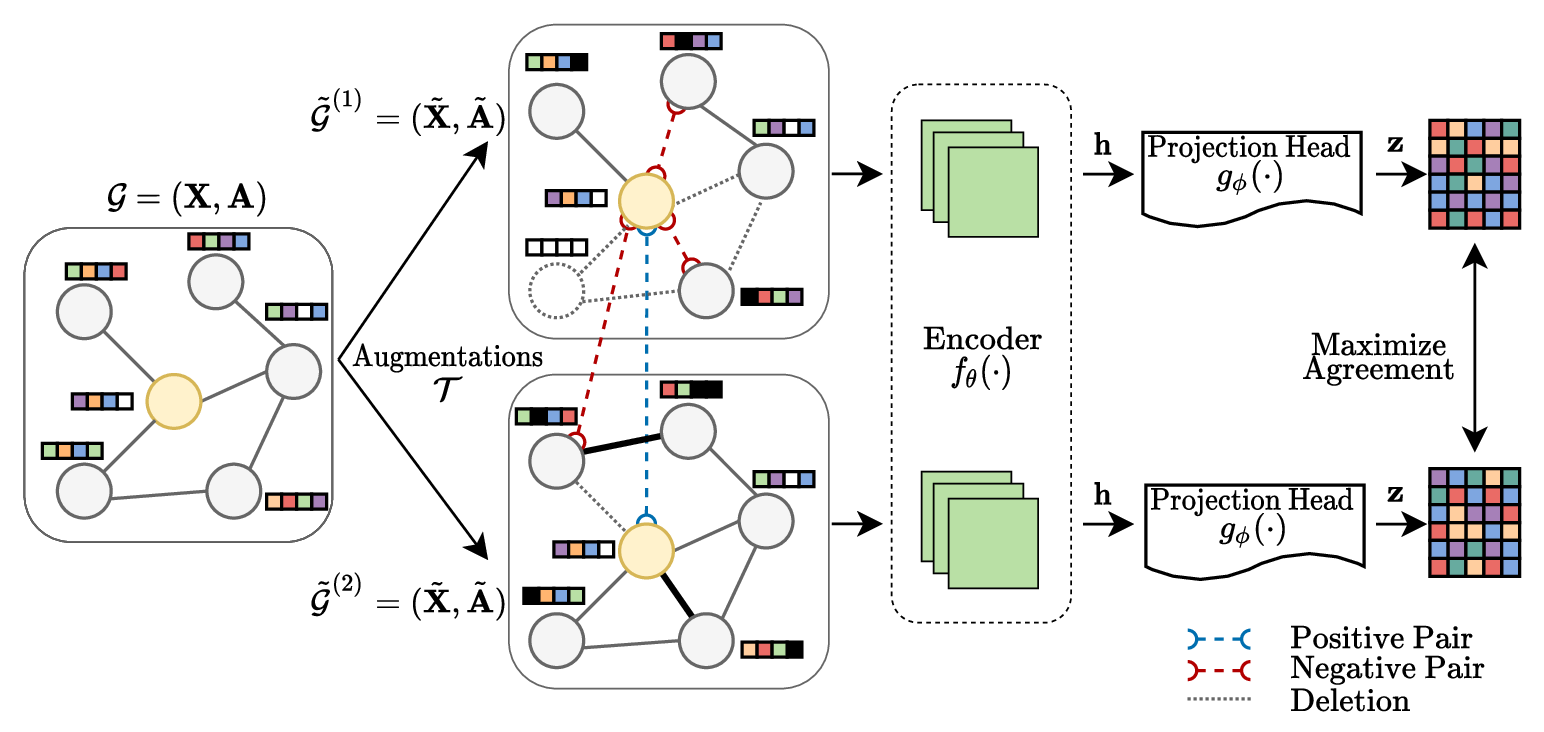

The figure above illustrates how GCL works and is the pipeline we will follow throughout this post. Instead of augmenting images, a graph is altered (edges or nodes deleted, features changed, etc.) to create different versions of the same graph. However, as with the images, the nodes should still have the same semantic meaning. A Graph Neural Network (encoder) then determines representations based on a loss that maximizes the agreement of similar nodes. This loss is defined so that the representation of the same nodes in different graph views should be pulled together. In the following, we will discuss these steps in more detail.

Graphs and Augmentations

Click here for the mathematical definition of graphs and their representations

We define a graph with \(N\) vertices as the core underlying object such that \(\mathcal{G} = (\mathbf X, \mathbf A)\). \(\mathbf{X} \in \mathbb{R}^{N \times F}\) denotes the feature matrix with \(F\) features and \(\mathbf A \in \mathbb{R}^{N \times N}\) is the adjacency matrix. While neural networks typically do not work well on this structure, Graph Neural Networks (GNNs)

Graphs are usually variable in size (i.e., the number of vertices and edges); hence, a fixed-size numeric representation can be valuable. Such representations are typically \(D\)-dimensional vectors in \(\mathbb{R}^{D}\), which compactly describe nodes or complete graphs.

In contrastive learning, the goal is to automatically find representations by leveraging a large amount of unlabeled data by generating contrastive views. In image contrastive learning, those views are created by augmentations that are usually straightforward. To name just a few, pictures can be cropped, rotated, or the contrast changed. However, graphs are structured fundamentally different than images, and operations that work on images, typically do not transfer to graphs. Depending on the data stored in the nodes or the edges of a graph, only a few augmentation techniques are applicable.

Graph augmentations form the basis of contrastive learning, as they provide the point of reference during: they determine which nodes are similar and thus, which representations need to be pulled together in the latent space. To formalize this, an augmentation function \(\mathcal{T}\) changes an original graph \(\mathcal G\) and creates different views \(\tilde{\mathcal G}\) by modifying the adjacency and feature matrix

\[\tilde{\mathcal G} = \mathcal{T}\left(\mathcal G) = (\mathcal{T}_{\mathbf{X}}(\mathbf X), \mathcal{T}_{\mathbf{A}}(\mathbf A)\right) \text{.}\]1. Graph Topology Transformation

Modifying the underlying topology is the most straightforward and intuitive way to augment a graph. Changing the topology means we delete or add edges and vertices but do not change the data they store

2. Node Feature Transformation

The second major way to agument a graph are feature-modifying augmentations which modify the feature matrix \(\mathbf X\) and with it the actual data stored. A very common and straightforward technique to augment the features is node feature masking

Depending on the concrete data stored for every node, adapting the features has drastically different capabilities. For example, consider a graph where every node stores an image. In such a setting, classical contrastive learning techniques, where images are rotated or the color space changed, are possible and likely lead to better results than primitive feature transformations.

3. Other Methods

Combining augmentations that change the topology and features is better than relying on only one of those types

Augmentations in graphs need to be hand-picked and fine-tuned. For every graph, different augmentations work better. Adaptive augmentations

4. Augmentation “Free” Methods

Some methods eliminate the need for augmentations altogether, such as graph soft-contrastive learning

Similarly, Wang et al.

Learning Representations

In the previous section, we discussed how to construct contrastive views with various augmentation techniques. With this wide range of different ways to generate contrasting views we can discuss how to learn representations. In the following, we will continue with the remaining parts of the pipeline: the actual models and how to train them. Now is a good time to look at the pipeline of GCL from before again to put this into context.

The Encoder and Projection Heads

The encoder \(f_\theta : \mathcal{G} \to \mathbb{R}^{N \times D}\) is the core part of the contrastive model and generates a representation \(\mathbf{h}_i\) for each node in the graph \(\mathcal{G}\). In principle, any model can be used to calculate the representations

In addition to the encoder, Chen et al.

During training, the projection head and the encoder, determine the representations \(\textbf{z} = g_\phi \circ f_\theta \left( \mathcal{G} \right)\) of all nodes in a graph. At inference, often only the encoder is used to create representation. This is because at inference, there is no need to “unlearn the augmentations”, because there will not be any applied.

Optimization

The encoder and projection heads need to be trained with a suitable loss to achieve the property that similar nodes have similar representations. While there are many possibilities to define the optimization target, we will focus on a minimization that only uses two augmented graph views produced by a single graph. This setting has been shown to yield good empirical results

where \(\mathcal{L}_{con}\) is a contrastive loss that takes the representations of two similar nodes (i.e., a positive pairs) \(i\) and \(j\) in two different views \(\tilde{\mathcal{G}}^{(1)}\) and \(\tilde{\mathcal{G}}^{(2)}\) respectively. The goal of any contrastive loss \(\mathcal{L}_{con}\) is to pull representations of positive pairs close to each other while pushing negative pairs apart. In practice, there are many different concrete contrastive losses to choose from.

Mutual Information

Approximating the mutual information is the basis for most contrastive learning losses. Mutual information describes the information that is shared by two random variables

\(D_{K L}\) is the Kullback–Leibler divergence and is a difference between two distributions. In this case, the joint probability \(p(\boldsymbol{x}, \boldsymbol{y})\) describes the shared amount of information, while the multiplication of marginal densities \(p(\boldsymbol{x}) p(\boldsymbol{y})\) describes their dissimilarity.

While mutual information forms the basis of most contrastive losses, calculating the exact value is notoriously difficult and hence, estimates of it are used in practice

Jensen-Shannon Estimator

The Jensen-Shannon Divergence (JSD)

\(s( \cdot, \cdot )\) is a similarity function and is typically the cosine similarity of the representations

The contrastive loss is computed for all similar pairs of nodes \(\left(\mathbf{z}_i, \mathbf{z}_j\right)\). For JSD, the similarity of this positive pair is then compared to all other negative pairs \(\left(\mathbf{z}_i, \mathbf{z}_j^{\prime}\right)\) sharing the node \(i\). This gives us the relative strength of this positive connection, similarly to as is done in the mutual information. We can also see that there is an expected value here, meaning that we would have to integrate over all negative pairs, which is usually difficult to calculate. In practice, the expected value is approximated as an average over all available negative pairs in the current views. For this, we can use negative pairs of nodes in the same graph or between nodes in different views.

Noise-Contrastive Estimator

Another widely used estimator

where \(\left(\mathbf{z}_i, \mathbf{z}_j\right)\) is again a positive pair, \(\mathbf{z}_j^{\prime}\) are negative pairs for the representation of node \(i\), and \(s( \cdot, \cdot)\) a similarity function. Pairs that are similar according to \(s( \cdot, \cdot)\) will then have a representation close in the latent space. Similarly to the Jensen-Shannon estimator, InfoNCE maximizes the relative similarity of positive pairs compared to their respective negative pairs. In many cases InfoNCE can outperform Jensen-Shannon estimator, but this effect diminishes with larger datasets

There is a variety of additional different losses available for graph contrastive learning such as Donsker-Varadhan objective

Discussion

Graph contrastive learning is a technique to learn representations in a self-supervised setting by exploiting the graph’s structure with augmentations to create contrastive views. Most work in the field is a direct extension of the image domain, and typically only empirical results are available. Extending algorithms to graphs is challenging, and there are still some open issues. For example, the usefulness of projection heads has only been examined in image contrastive learning by Chen et al.

As of writing, choosing the correct graph augmentations is difficult because they typically rely on (implicit) assumptions, such as homophily, which not every graph fulfills. A solid mathematical background or the question “What is the best augmentation function for my graph?” remains an open field of research. Most work in GCL relies on evaluating different augmentations on various datasets and empirically drawing conclusions from this

Although GCL has its issues and the performance varies drastically between use cases

Acknowledgements

Thank you Filippo Guerranti for our fruitful discussions and your help in revising this document.